Simple and seamless file transfer to Amazon S3 and Amazon EFS using SFTP, FTPS, and FTP

AWS Transfer for SFTP is a fully managed service that lets users transfer (upload and download) files into and out of an S3 bucket.

If you already have an SFTP connection open with pysftp, you can copy an S3 object straight to the SFTP server without a temporary local file: `with sftp.open('/sftp/path/filename', 'wb', 32768) as f: s3.download_fileobj('mybucket', 'mykey', f)`. For the purpose of the 32768 argument, see "Writing to a file on SFTP server opened using pysftp 'open' method is slow". For details, see my guide "Setting up an SFTP access to Amazon S3".

Mounting Bucket to Linux Server

As @Michael already answered, just mount the bucket on a Linux server (Amazon EC2) using the s3fs file system (or similar), and use the server's built-in SFTP server to access the bucket. Here are the basic instructions: install s3fs.
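The one-line pysftp snippet above can be wrapped in a small helper. This is a minimal sketch. It assumes `s3_client` is a boto3 S3 client and `sftp` is an already-open `pysftp.Connection` (or anything with the same `open(path, mode, bufsize)` method). The function name `copy_s3_object_to_sftp` is hypothetical. The helper streams the object directly into the remote file, so nothing is staged on local disk.

```python
from typing import Any

# Same buffer size as the 32768 argument in the one-liner above.
SFTP_BUFSIZE = 32768


def copy_s3_object_to_sftp(s3_client: Any, sftp: Any, bucket: str, key: str,
                           remote_path: str, bufsize: int = SFTP_BUFSIZE) -> None:
    """Stream an S3 object directly into a file on an SFTP server.

    Assumes s3_client behaves like a boto3 S3 client (download_fileobj) and
    sftp like a pysftp.Connection or paramiko.SFTPClient (open(path, mode, bufsize)).
    """
    # Open the remote file for binary writing; no local temporary file is created.
    with sftp.open(remote_path, "wb", bufsize) as remote_file:
        # download_fileobj writes the object's bytes into any writable file-like object.
        s3_client.download_fileobj(bucket, key, remote_file)


# Example wiring (requires the third-party boto3 and pysftp packages):
#   import boto3, pysftp
#   s3 = boto3.client("s3")
#   with pysftp.Connection("sftp.example.com", username="user", password="secret") as sftp:
#       copy_s3_object_to_sftp(s3, sftp, "mybucket", "mykey", "/sftp/path/filename")
```

Passing the clients in as arguments keeps the helper independent of how the connections are authenticated.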

The AWS Transfer Family provides fully managed support for file transfers directly into and out of Amazon S3 or Amazon EFS. With support for Secure File Transfer Protocol (SFTP), File Transfer Protocol over SSL (FTPS), and File Transfer Protocol (FTP), the AWS Transfer Family helps you seamlessly migrate your file transfer workflows to AWS by integrating with existing authentication systems, and providing DNS routing with Amazon Route 53 so nothing changes for your customers and partners, or their applications. With your data in Amazon S3 or Amazon EFS, you can use it with AWS services for processing, analytics, machine learning, archiving, as well as home directories and developer tools. Getting started with the AWS Transfer Family is easy; there is no infrastructure to buy and set up.


The /n software SFTP Server is a highly configurable, high-performance, and lightweight SSH file server. It is designed to provide users with rock-solid security and high throughput. The product offers a highly configurable yet intuitive interface that can be set up in a couple of minutes.

WinSCP is a popular free SFTP and FTP client for Windows and a powerful file manager that can improve your productivity. It offers an easy-to-use GUI for copying files between a local and a remote computer using multiple protocols: Amazon S3, FTP, FTPS, SCP, SFTP, or WebDAV. Power users can automate WinSCP using its .NET assembly.

Benefits

Easily and seamlessly modernize your file transfer workflows. File exchange over SFTP, FTPS, and FTP is deeply embedded in business processes across many industries like financial services, healthcare, telecom, and retail. They use these protocols to securely transfer files like stock transactions, medical records, invoices, software artifacts, and employee records. The AWS Transfer Family lets you preserve your existing data exchange processes while taking advantage of the superior economics, data durability, and security of Amazon S3 or Amazon EFS. With just a few clicks in the AWS Transfer Family console, you can select one or more protocols, configure Amazon S3 buckets or Amazon EFS file systems to store the transferred data, and set up your end user authentication by importing your existing end user credentials, or integrating an identity provider like Microsoft Active Directory or LDAP. End users can continue to transfer files using existing clients, while files are stored in your Amazon S3 bucket or Amazon EFS file system.
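The console steps described above can also be scripted. The sketch below assumes the boto3 Transfer Family client (`boto3.client("transfer")`) and uses its `create_server` and `create_user` calls. The helper name, user name, bucket, IAM role ARN, and SSH key are hypothetical placeholders. The sketch sticks to SFTP with service-managed users, because FTPS and FTP endpoints need additional configuration, such as a certificate for FTPS, a VPC endpoint, and an external identity provider.

```python
from typing import Any, Tuple


def create_sftp_server_with_user(transfer: Any, user_name: str, bucket: str,
                                 role_arn: str, ssh_public_key: str) -> Tuple[str, str]:
    """Create an SFTP-only, S3-backed Transfer Family server and add one user.

    Assumes `transfer` behaves like boto3.client("transfer"); all argument
    values (bucket, role ARN, key) are hypothetical placeholders.
    """
    server = transfer.create_server(
        Protocols=["SFTP"],                      # FTPS/FTP need extra setup, omitted here
        Domain="S3",                             # "EFS" would store files in Amazon EFS instead
        IdentityProviderType="SERVICE_MANAGED",  # users and SSH keys are managed by the service
    )
    server_id = server["ServerId"]
    user = transfer.create_user(
        ServerId=server_id,
        UserName=user_name,
        Role=role_arn,                  # IAM role that grants the user access to the bucket
        HomeDirectory=f"/{bucket}",     # land the user in the bucket root
        SshPublicKeyBody=ssh_public_key,
    )
    return server_id, user["UserName"]
```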

No servers to manage

You no longer have to purchase and run your own SFTP, FTPS, or FTP servers and storage to securely exchange data with partners and customers. The AWS Transfer Family manages your file infrastructure for you, which includes auto-scaling capacity and maintaining high availability with a multi-AZ architecture.

Seamless migrations

The AWS Transfer Family is fully compatible with the SFTP, FTPS, and FTP standards and connects directly with your identity provider systems like Active Directory, LDAP, Okta, and others. For you, this means you can migrate file transfer workflows to AWS without changing your existing authentication systems, domain, and hostnames. Your external customers and partners can continue to exchange files with you, without changing their applications, processes, client software configurations, or behavior.

Works natively with AWS services

The service stores the data in Amazon S3 or Amazon EFS, making it easily available for you to use AWS services for processing and analytics workflows, unlike third-party tools that may keep your files in silos. Native support for AWS management services simplifies your security, monitoring, and auditing operations.


Use cases

Sharing and receiving files internally and with third parties

Exchanging files internally within an organization or externally with third parties is a critical part of many business workflows. This file sharing needs to be done securely, whether you are transferring large technical documents for customers, media files for a marketing agency, research data, or invoices from suppliers. To seamlessly migrate from existing infrastructure, the AWS Transfer Family provides protocol options, integration with existing identity providers, and network access controls, so there are no changes for your end users. The AWS Transfer Family makes it easy to support recurring data sharing processes as well as one-off secure file transfers, whichever suits your business needs.

Data distribution made secure and easy

Providing value-added data is a core part of many big data and analytics organizations. This requires making your data easily accessible while keeping it secure. The AWS Transfer Family offers multiple protocols to access data in Amazon S3 or Amazon EFS, and provides access control mechanisms and flexible folder structures that help you dynamically decide who gets access to what and how. You also no longer need to worry about scaling as your data-sharing business grows, because the service provides built-in real-time scaling and high availability for secure and timely data transfers.

Ecosystem data lakes

Whether you are part of a life sciences organization or an enterprise running business-critical analytics workloads in AWS, you may need to rely on third parties to send you structured or unstructured data. With the AWS Transfer Family, you can set up your partner teams to transfer data securely into your Amazon S3 bucket or Amazon EFS file system over the protocols of your choice. You can then apply the AWS portfolio of analytics and machine learning capabilities to the data to advance your research projects. You can do all this without buying more hardware to run storage and compute on-premises.

Customers

Customers are using AWS Transfer for SFTP, AWS Transfer for FTPS, and AWS Transfer for FTP for a variety of use cases. Visit the customers page to read about their experiences.



In this article, we will see how you can set up an FTP server on an EC2 instance that uploads and downloads data directly to and from an Amazon S3 bucket. You can find out more about S3 buckets here: Amazon AWS – Understanding EC2 storage – Part III. To do this, we will need the s3fs utility. s3fs is a FUSE filesystem that lets us mount an Amazon S3 bucket with read/write access as a local filesystem. The files are stored in S3, and other applications can access them. At the time of writing, s3fs limited the maximum file size to 64 GB.


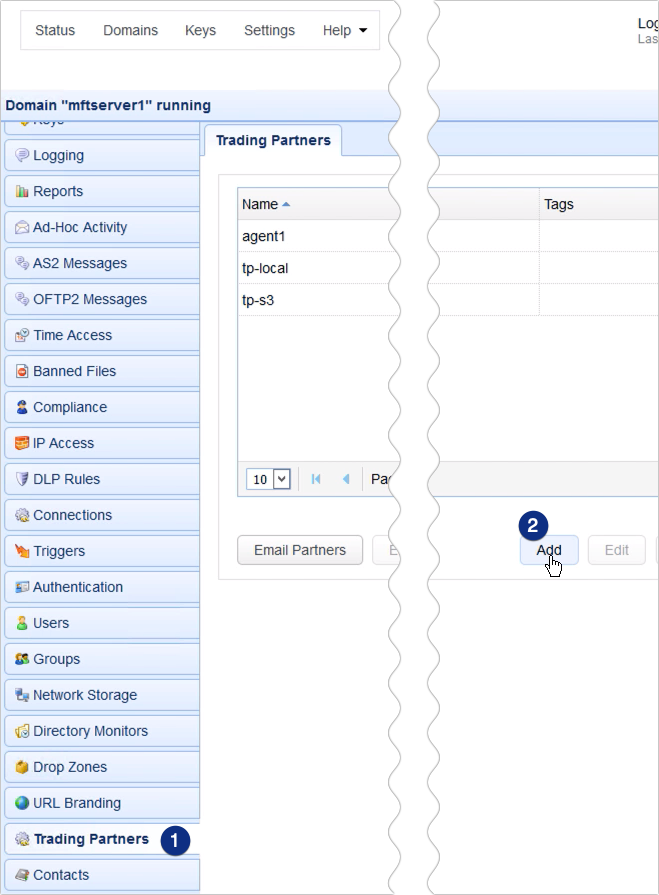

The steps to set up an FTP server whose files are stored in an Amazon S3 bucket are:

- Download s3fs software

- Specify the security credentials

- Install the FTP server and configure the users and server settings

Let's go through each of these steps in detail.


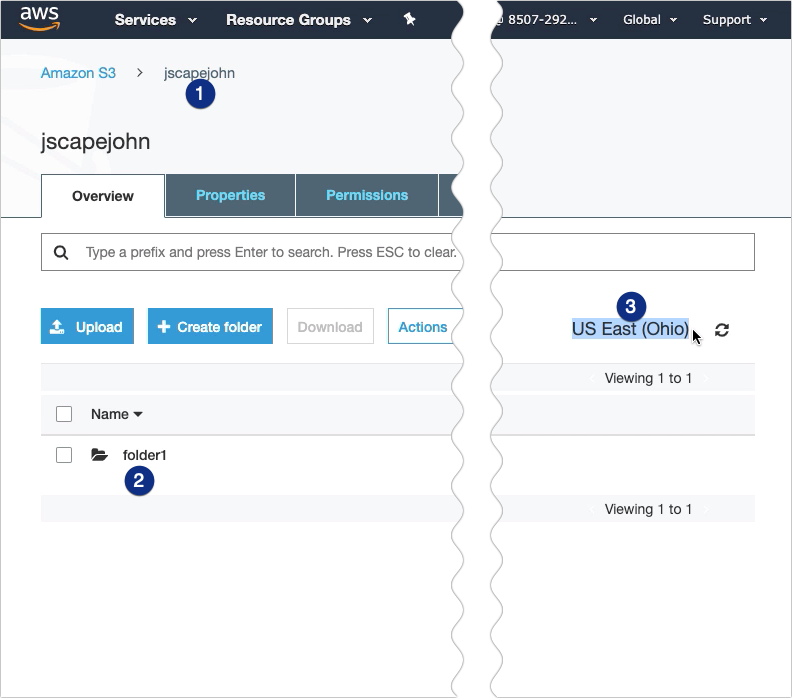

First, we need an S3 bucket. I have already created one. Note its exact name, as it will be referenced later:

Also, I have one EC2 instance to which the S3 bucket will be attached:

There is one thing that should not be forgotten. You must allow inbound traffic for TCP ports 20-21 so that the FTP clients can connect to the FTP server. This security group attached to the EC2 instance should do the job:
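As a hedged sketch, the same inbound rule can be created with boto3's `authorize_security_group_ingress`. The helper names, the CIDR range, and the passive port range are hypothetical. The passive range goes beyond the original text and is an assumption. FTP clients in passive mode open data connections to a separate port range on the server, so that range usually has to be allowed as well, and it must match the FTP server's configuration. Restricting the CIDR to known client networks is safer than `0.0.0.0/0`.

```python
from typing import Any, Dict, List, Optional, Tuple


def ftp_ingress_permissions(cidr: str,
                            passive_ports: Optional[Tuple[int, int]] = None) -> List[Dict[str, Any]]:
    """Build an IpPermissions list for TCP ports 20-21, plus an optional passive range.

    passive_ports is a (low, high) tuple that must match the FTP server's
    passive-mode port range.
    """
    permissions: List[Dict[str, Any]] = [{
        "IpProtocol": "tcp",
        "FromPort": 20,
        "ToPort": 21,
        "IpRanges": [{"CidrIp": cidr, "Description": "FTP (ports 20-21)"}],
    }]
    if passive_ports is not None:
        low, high = passive_ports
        permissions.append({
            "IpProtocol": "tcp",
            "FromPort": low,
            "ToPort": high,
            "IpRanges": [{"CidrIp": cidr, "Description": "FTP passive data ports"}],
        })
    return permissions


def open_ftp_ports(ec2: Any, group_id: str, cidr: str,
                   passive_ports: Optional[Tuple[int, int]] = None) -> None:
    """Apply the rules; assumes `ec2` behaves like boto3.client("ec2")."""
    ec2.authorize_security_group_ingress(
        GroupId=group_id,
        IpPermissions=ftp_ingress_permissions(cidr, passive_ports),
    )
```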

Connect to the EC2 instance using the public IP allocated:

Log in as root, as we will run several commands as root:

Install the packages we need in order to use FUSE:

Download the software:

Decompress the archive and change into the extracted folder:

Start the installation process by running './configure':

Next compile the programs and create the executable by running the 'make' command:

Finish the installation, which copies the executable and other required files built in the previous step to their final directories:
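The configure, compile, and install steps above can be sketched in Python with `subprocess`. This assumes the s3fs source has already been unpacked into `src_dir` and that the build dependencies are installed. The helper names are hypothetical. The `./autogen.sh` step is an addition for sources taken from the s3fs-fuse git repository, which ships `autogen.sh` instead of a generated `./configure` script.

```python
import os
import subprocess
from typing import Callable, List


def s3fs_build_steps(src_dir: str) -> List[List[str]]:
    """Return the build commands for an s3fs source tree, in order."""
    steps: List[List[str]] = []
    # A git checkout has no ./configure yet; autogen.sh generates it.
    if not os.path.exists(os.path.join(src_dir, "configure")):
        steps.append(["./autogen.sh"])
    # configure -> compile -> copy the results to their final directories.
    steps += [["./configure"], ["make"], ["make", "install"]]
    return steps


def build_s3fs(src_dir: str, run: Callable[..., object] = subprocess.run) -> None:
    """Run each step inside src_dir, stopping at the first failure (check=True)."""
    for cmd in s3fs_build_steps(src_dir):
        run(cmd, cwd=src_dir, check=True)
```

The `run` parameter defaults to `subprocess.run` and can be swapped for a dry-run function that only prints the commands.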

Next, configure the AWS credentials. We will create the s3fs password file, set the proper permissions on it, and then write the AWS credentials into it. The s3fs password file has the format `accessKeyId:secretAccessKey`:
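Creating the password file with the right permissions can be sketched as follows. The path and the key values are placeholders. Examples of the path are `/etc/passwd-s3fs` for a system-wide file or `~/.passwd-s3fs` for the current user. The helper name is hypothetical. The file is created with mode 600 because s3fs checks that its credentials file is not accessible to other users.

```python
import os


def write_s3fs_passwd(path: str, access_key_id: str, secret_access_key: str) -> None:
    """Write an s3fs credentials file in ACCESS_KEY_ID:SECRET_ACCESS_KEY format."""
    # Create the file with owner-only permissions from the start,
    # so the secret is never briefly world-readable.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as f:
        f.write(f"{access_key_id}:{secret_access_key}\n")
    # os.open applies the mode only to newly created files, so enforce it for existing ones too.
    os.chmod(path, 0o600)
```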

Next create a folder where the S3 bucket will be mounted:

And mount the S3 bucket:
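The sketch below covers the mount step. The bucket name, mount point, and password file path are placeholders, and the helper names are hypothetical. `passwd_file`, `allow_other`, `uid`, and `gid` are standard s3fs/FUSE mount options. `allow_other` matters here because the FTP user, not root, will read and write the mounted files. `uid`/`gid` may also be needed so that this user gets write access.

```python
import os
import subprocess
from typing import Callable, List, Optional


def s3fs_mount_command(bucket: str, mountpoint: str, passwd_file: str,
                       uid: Optional[int] = None, gid: Optional[int] = None) -> List[str]:
    """Build the s3fs command line for mounting `bucket` at `mountpoint`."""
    cmd = ["s3fs", bucket, mountpoint,
           "-o", f"passwd_file={passwd_file}",
           # Let users other than the one who mounted (e.g. the FTP user) access the files.
           "-o", "allow_other"]
    if uid is not None:
        cmd += ["-o", f"uid={uid}"]  # present files as owned by this user id
    if gid is not None:
        cmd += ["-o", f"gid={gid}"]  # ...and this group id
    return cmd


def mount_bucket(bucket: str, mountpoint: str, passwd_file: str,
                 run: Callable[..., object] = subprocess.run) -> None:
    os.makedirs(mountpoint, exist_ok=True)  # the mount point must exist first
    run(s3fs_mount_command(bucket, mountpoint, passwd_file), check=True)
```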

Let's confirm that the S3 bucket was mounted:
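One way to do this check programmatically is to read `/proc/mounts`, where s3fs mounts appear with the filesystem type `fuse.s3fs`. This is a minimal, Linux-only sketch, and the function names are hypothetical.

```python
def is_s3fs_mount_listed(mounts_text: str, mountpoint: str) -> bool:
    """Check /proc/mounts-style text for an s3fs (fuse.s3fs) entry at mountpoint."""
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        # /proc/mounts escapes spaces in paths as \040.
        path = fields[1].replace("\\040", " ")
        if path == mountpoint and fields[2] == "fuse.s3fs":
            return True
    return False


def is_s3fs_mounted(mountpoint: str, mounts_path: str = "/proc/mounts") -> bool:
    with open(mounts_path) as f:
        return is_s3fs_mount_listed(f.read(), mountpoint)
```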

Install the FTP server:

Add a user and set its home directory (in this case, the directory where the S3 bucket is mounted). Disable shell login for the new user. The user will still be able to log in over FTP, but will not be able to log in to the shell of the EC2 instance where the FTP server is running:


Set a password for the user:
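The user setup above can be sketched by wrapping the standard `useradd` and `chpasswd` tools with `subprocess`. The user name, password, and mount point are placeholders, and the helper name is hypothetical. `/sbin/nologin` is the usual no-login shell on Amazon Linux and other Red Hat-style systems. The path may differ on other distributions.

```python
import subprocess
from typing import Callable


def create_ftp_user(user: str, home: str, password: str,
                    run: Callable[..., object] = subprocess.run) -> None:
    """Create a user whose home is the s3fs mount and who has no login shell."""
    # -d: home directory (the mount point); -M: don't create it, it already exists;
    # -s /sbin/nologin: the account cannot open a shell on the instance.
    run(["useradd", "-d", home, "-M", "-s", "/sbin/nologin", user], check=True)
    # chpasswd reads "user:password" lines from stdin, so no interactive prompt is needed.
    run(["chpasswd"], input=f"{user}:{password}\n", text=True, check=True)
```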

Configure the FTP server by editing its configuration file and adding this content:
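The original does not name the FTP server or show its configuration. Assuming vsftpd (a common choice on Amazon Linux), a minimal configuration for this setup could look like the output of the sketch below. The option names come from `vsftpd.conf`. The helper name, public IP, and passive port range are hypothetical. `pasv_address` is set because an EC2 instance only sees its private address, and passive-mode clients need the public one.

```python
from typing import Dict


def render_vsftpd_conf(pasv_address: str, pasv_min_port: int = 1024,
                       pasv_max_port: int = 1048) -> str:
    """Render a minimal vsftpd.conf for local users chrooted into their home."""
    settings: Dict[str, str] = {
        "anonymous_enable": "NO",         # no anonymous logins
        "local_enable": "YES",            # allow local system users such as the FTP user
        "write_enable": "YES",            # allow uploads
        "chroot_local_user": "YES",       # lock users into their home (the S3 mount)
        "allow_writeable_chroot": "YES",  # needed when the chroot directory is writable
        "pasv_enable": "YES",
        "pasv_min_port": str(pasv_min_port),  # must match the open passive port range
        "pasv_max_port": str(pasv_max_port),
        "pasv_address": pasv_address,     # public IP advertised to passive-mode clients
    }
    # vsftpd.conf does not allow spaces around "=".
    return "".join(f"{key}={value}\n" for key, value in settings.items())
```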


Start the FTP server:

Now let's connect to the FTP server from a machine on the Internet and upload a file:
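The upload test can also be scripted with Python's standard `ftplib`. In this sketch, the host, user name, and password are placeholders, and the helper names are hypothetical. `upload_file` takes an already-connected `ftplib.FTP` object so it can be reused. ftplib uses passive mode by default, so the server's passive port range has to be reachable from the client.

```python
import ftplib
import os


def upload_file(ftp: ftplib.FTP, local_path: str) -> str:
    """Upload local_path in binary mode to the current remote directory."""
    remote_name = os.path.basename(local_path)
    with open(local_path, "rb") as f:
        ftp.storbinary(f"STOR {remote_name}", f)
    return remote_name


def upload_to_server(host: str, user: str, password: str, local_path: str) -> str:
    """Connect, log in, and upload one file; the connection closes on exit."""
    with ftplib.FTP(host, timeout=30) as ftp:
        ftp.login(user, password)
        return upload_file(ftp, local_path)
```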

The file should now show up in the S3 bucket:

Now, let's unmount the S3 bucket and confirm that no file can be uploaded to the FTP server:
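A FUSE mount such as s3fs is detached with `umount` when running as root, or with `fusermount -u` by the unprivileged user who mounted it. The small sketch below picks the matching command. The function name is hypothetical.

```python
from typing import List


def unmount_command(mountpoint: str, as_root: bool = True) -> List[str]:
    """Command to detach an s3fs (FUSE) mount."""
    if as_root:
        return ["umount", mountpoint]
    # Unprivileged users unmount their own FUSE filesystems with fusermount -u.
    return ["fusermount", "-u", mountpoint]
```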

The file cannot be uploaded:

That's all. You should now know how to mount an Amazon S3 bucket as a local filesystem on an EC2 instance and serve it over FTP. The advantages of using S3 as storage are that it is relatively inexpensive and that it is designed for 99.999999999% durability of objects.
